Between Humans and Apes: A New Face Database

Researchers from the Center for Language Evolution Studies (CLES) at Nicolaus Copernicus University in Toruń are co-authors of the Apemen Faces Database (ApeFD), a pioneering collection of photorealistic facial images designed to support research into human evolution, cognition, and social perception.

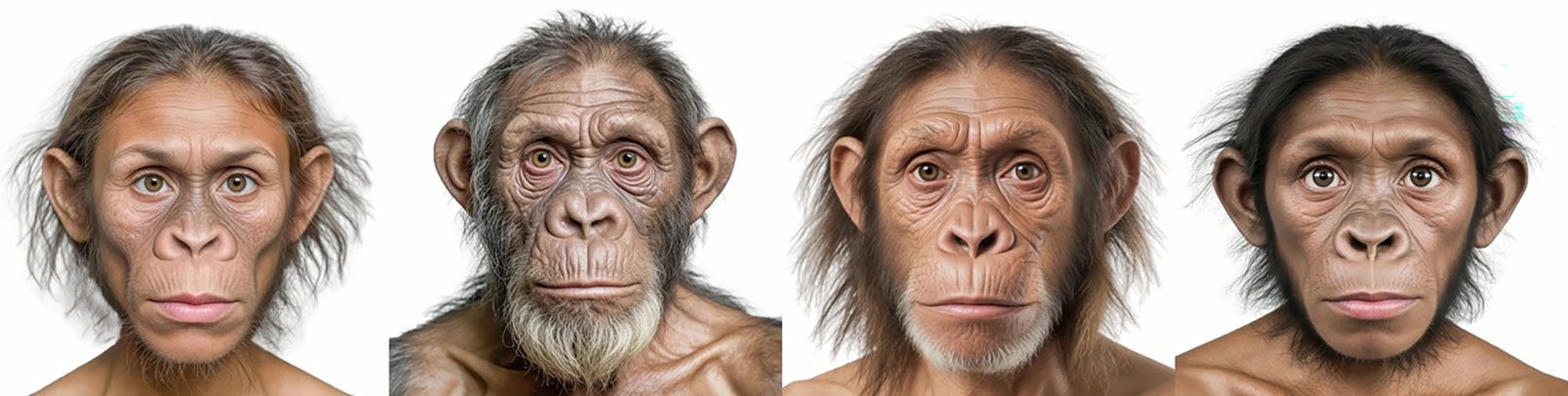

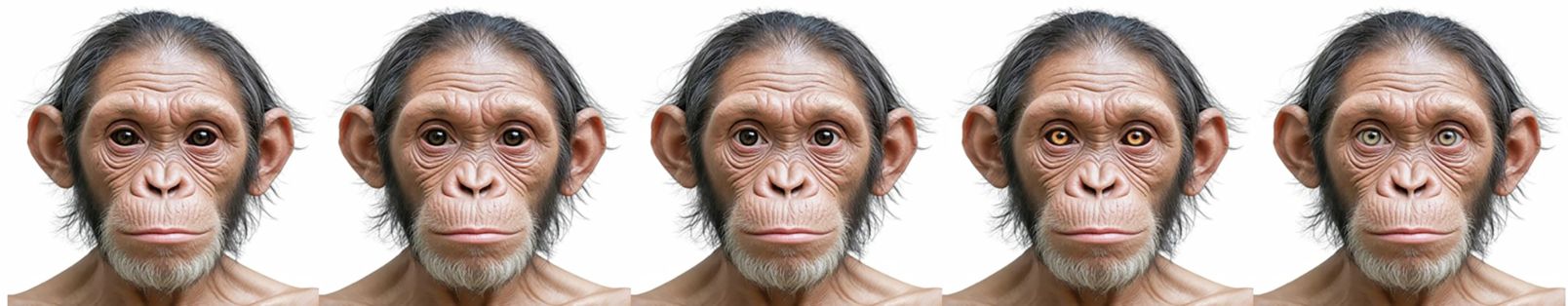

The ApeFD is an innovative dataset comprising 620 meticulously crafted images of 31 generalized hominin models (importantly, these are not reconstructions of any actual hominin species), each presented in a range of eye color variants. Together they bridge the visual gap between modern humans and our ancient relatives. Each face is paired with detailed geometric morphometric data and perceptual ratings on key social traits such as threat, sociability, trustworthiness, health, age, and masculinity. The dataset was developed by the Center for Language Evolution Studies at Nicolaus Copernicus University in Toruń (dr hab. Sławomir Wacewicz, prof. NCU, dr Juan Olvido Perea Garcia, dr Marta Sibierska, dr Vojtěch Fiala and Anna Szala) in collaboration with researchers at the Wroclaw University of Health and Sport Sciences, the Hirszfeld Institute of Immunology and Experimental Therapy, and the University of Las Palmas de Gran Canaria.

The ApeFD was presented in the journal "Scientific Data".

Photo: Scientific Data

How the images were made

The team developed the collection by combining automated tools with manual artistry. Initial images were generated with AI systems including Midjourney and GetImg.ai, and final renders were created using the RealVisXL V4.0 model.

"Post-processing involved hundreds of hours of manual refinement by an experienced graphic artist (upsampling, texture work, standardized lighting, passport-style framing and careful eye edits) to ensure consistent, photorealistic results," says dr hab. Sławomir Wacewicz, NCU professor and director of the Center for Language Evolution Studies at NCU.

From roughly 7,000 generated candidates, the authors selected and polished 31 final faces that meet their "humanlike but not human" design goal.

Why this matters

What sets ApeFD apart is its design philosophy. Existing face databases use pictures of real people, which is a good approach for typical research questions but limiting if you want to test how people respond to face shapes outside modern human variation. ApeFD fills that gap by offering realistic, non-human faces that still invite social judgments (e.g., "trustworthy?" or "threatening?").


That means psychologists, neuroscientists, primatologists, and even people working in film, games, or design can run controlled experiments about gaze, eye coloration, identity cues, the "uncanny valley", and ideas about how human eyes and faces evolved. For example, researchers can:

- test how different eye colorations and sclera shapes affect perceptions of trustworthiness, threat, or attention;

- run reaction-time and psychophysiology experiments (heart rate, skin conductance, gaze tracking) without using identifiable human images; this circumvents a limitation of many impression-formation studies, in which a trait modified beyond what subjects are familiar with may provoke a response to the violation of expectations rather than to intrinsic properties of the trait itself;

- compare human and non-human primate responses to the same stimuli in zoo or laboratory settings.

Beyond academia, designers, filmmakers, and game developers can use ApeFD's editable masters to create lifelike faces while avoiding resemblance to real people. These practical uses help answer deeper scientific questions – for example, about the evolution of the cooperative-eye features that make human gaze especially readable.

NCU News
